Understanding the Critical Need for Log Storage Optimization

In today’s data-driven landscape, organizations generate large volumes of log data continuously: application logs, system events, security audit trails, and user activity records. As these volumes grow, they strain storage capacity and budgets. Optimizing high-volume log storage has therefore become a core requirement for enterprises that want to stay operationally efficient while controlling costs.

Modern IT environments span cloud-native applications, microservices architectures, and hybrid infrastructure deployments. That complexity multiplies the number of log sources and raises the bar for log management. Large organizations may need to store, index, and retrieve petabytes of log data while keeping it quickly accessible for troubleshooting, compliance, and analytics.

Essential Criteria for Evaluating Log Storage Platforms

When selecting a platform for high-volume log storage, several factors determine whether it will perform well and remain cost-effective. Scalability is usually the first consideration: the platform must absorb rapid data growth by adding capacity, without degrading ingestion, query performance, or reliability.

Performance and Throughput Capabilities

At large scale, a log storage platform may need to ingest millions of log entries per second. Maintaining consistent performance during peak load periods distinguishes enterprise-grade solutions from basic logging tools. Near-real-time indexing makes newly ingested data searchable within seconds, which supports rapid incident response and troubleshooting.
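To make these rates concrete, the sketch below converts an event rate and average event size into daily raw volume and peak bandwidth. The 1 million events per second, 500-byte average event size, and 3x peak factor are illustrative assumptions, and the function names are hypothetical.

```python
# Back-of-the-envelope sizing for log ingestion.
# All workload numbers are illustrative assumptions.

SECONDS_PER_DAY = 86_400


def daily_ingest_bytes(events_per_second: int, avg_event_bytes: int) -> int:
    """Raw (uncompressed) bytes ingested per day at a steady event rate."""
    return events_per_second * avg_event_bytes * SECONDS_PER_DAY


def to_terabytes(num_bytes: float) -> float:
    """Convert bytes to decimal terabytes (1 TB = 10**12 bytes)."""
    return num_bytes / 10**12


def peak_bandwidth_gb_per_s(events_per_second: int, avg_event_bytes: int,
                            peak_factor: float) -> float:
    """Ingest bandwidth in GB/s when traffic spikes to peak_factor x average."""
    return events_per_second * peak_factor * avg_event_bytes / 10**9


if __name__ == "__main__":
    # Hypothetical workload: 1 million events/s averaging 500 bytes each.
    raw = daily_ingest_bytes(1_000_000, 500)
    print(f"{to_terabytes(raw):.1f} TB/day raw")  # 43.2 TB/day raw
    print(f"{peak_bandwidth_gb_per_s(1_000_000, 500, 3.0):.1f} GB/s at 3x peak")  # 1.5 GB/s at 3x peak
```

At that assumed rate, even a 10:1 compression ratio still leaves roughly 4.3 TB of new data per day, which is why tiering and retention rules matter.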

Cost Optimization Features

Effective cost management combines several strategies, including data tiering, compression, and automated lifecycle policies. Leading platforms apply lifecycle rules, typically based on data age, to migrate older data automatically to cheaper storage tiers while keeping it accessible for compliance and historical analysis.
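The effect of tiering and compression on cost can be estimated with simple steady-state arithmetic: a tier that holds each day's logs for N days always contains N days' worth of (compressed) data. The prices, tier durations, and 5:1 compression ratio below are hypothetical placeholders, not quotes from any provider.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str
    price_per_gb_month: float  # hypothetical price, not a real provider quote
    days: int                  # how long each day's data stays in this tier


def monthly_storage_cost(daily_raw_gb: float, tiers: list,
                         compression_ratio: float = 1.0) -> float:
    """Steady-state monthly cost when each day's logs pass through `tiers` in order.

    A tier that keeps data for `days` days always holds `days` days of
    (compressed) data, so its size is daily_stored_gb * days.
    """
    daily_stored_gb = daily_raw_gb / compression_ratio
    return sum(daily_stored_gb * t.days * t.price_per_gb_month for t in tiers)


if __name__ == "__main__":
    all_hot = [Tier("hot", 0.10, 365)]
    tiered = [Tier("hot", 0.10, 7), Tier("warm", 0.03, 83), Tier("cold", 0.004, 275)]
    print(f"{monthly_storage_cost(100, all_hot):.2f}")      # 3650.00
    print(f"{monthly_storage_cost(100, tiered):.2f}")       # 429.00
    print(f"{monthly_storage_cost(100, tiered, 5.0):.2f}")  # 85.80
```

Under these made-up prices, the tiered layout costs roughly 12 percent of keeping a full year hot, and compression divides the remainder again. Real savings depend on actual prices, retrieval fees, and query patterns.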

Leading Enterprise Platforms for Log Storage Optimization

Elasticsearch and the Elastic Stack

Elasticsearch has established itself as a cornerstone technology for log storage and analysis, offering powerful full-text search and horizontal scalability. Its distributed architecture splits indices into shards spread across nodes, so capacity grows by adding nodes, and dedicated data tiers (hot, warm, cold, and frozen node roles) let organizations match hardware to each workload.

Together with Logstash and Beats for collection and processing and Kibana for visualization, Elasticsearch forms the Elastic Stack, a comprehensive ecosystem for log management. Index lifecycle management (ILM) automatically moves indices through hot, warm, cold, frozen, and delete phases, balancing performance requirements with cost.
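As an illustration, an ILM policy is a JSON document sent to Elasticsearch's `PUT _ilm/policy/<name>` endpoint. The sketch below builds one such body in Python; the rollover thresholds, phase ages, and choice of actions are assumptions to adapt, and the ILM documentation for your Elasticsearch version should be checked before applying it.

```python
import json


def build_ilm_policy(rollover_max_age: str = "1d",
                     rollover_max_primary_shard_size: str = "50gb",
                     warm_after: str = "7d",
                     cold_after: str = "30d",
                     delete_after: str = "90d") -> dict:
    """Build a request body for Elasticsearch's PUT _ilm/policy/<name> API.

    All thresholds are illustrative defaults, not recommendations.
    """
    return {
        "policy": {
            "phases": {
                # Hot: the write index rolls over by age or primary shard size.
                "hot": {
                    "actions": {
                        "rollover": {
                            "max_age": rollover_max_age,
                            "max_primary_shard_size": rollover_max_primary_shard_size,
                        },
                        "set_priority": {"priority": 100},
                    }
                },
                # Warm: read-mostly data, merged down to a single segment.
                "warm": {
                    "min_age": warm_after,
                    "actions": {
                        "forcemerge": {"max_num_segments": 1},
                        "set_priority": {"priority": 50},
                    },
                },
                # Cold: rarely queried data, lowest recovery priority.
                "cold": {
                    "min_age": cold_after,
                    "actions": {"set_priority": {"priority": 0}},
                },
                # Delete: remove the index once retention expires.
                "delete": {
                    "min_age": delete_after,
                    "actions": {"delete": {}},
                },
            }
        }
    }


if __name__ == "__main__":
    # Body you would send to, e.g., PUT _ilm/policy/logs-policy
    # (the policy name is hypothetical).
    print(json.dumps(build_ilm_policy(), indent=2))
```

When rollover is enabled, each phase's `min_age` is measured from the time the index rolled over rather than from index creation, so the ages above describe how long data sits after it stops receiving writes.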

Splunk Enterprise and Splunk Cloud

Splunk’s platform excels in handling massive data volumes while providing powerful search and analytics capabilities. The solution’s proprietary indexing technology enables rapid data retrieval across petabyte-scale datasets, making it particularly suitable for organizations with stringent performance requirements.

Splunk’s SmartStore architecture reduces storage costs by separating compute from storage. Indexers keep a local cache of recent and frequently searched data, while warm buckets are stored in S3-compatible object storage. Historical data therefore sits on inexpensive storage without sacrificing fast access to frequently queried information.
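The compute/storage split can be illustrated with a toy read-through cache: every bucket lives in the remote store, and searches pull buckets into a bounded local cache that evicts the least recently used entry. This is a simplified model under an assumed LRU policy, not Splunk's cache manager, and the class and method names are hypothetical.

```python
from collections import OrderedDict


class BucketCache:
    """Toy model of a bounded local cache in front of object storage.

    Not Splunk's implementation: all buckets live in `remote` (a stand-in
    for an S3-compatible store) and reads go through an LRU local cache.
    """

    def __init__(self, capacity: int):
        self.capacity = capacity
        self.local = OrderedDict()  # bucket_id -> data, least recently used first
        self.remote = {}            # bucket_id -> data (object storage stand-in)
        self.hits = 0
        self.misses = 0

    def upload(self, bucket_id: str, data: bytes) -> None:
        """Persist a bucket to remote storage (e.g. when it rolls to warm)."""
        self.remote[bucket_id] = data

    def read(self, bucket_id: str) -> bytes:
        """Return bucket data, fetching from remote storage on a cache miss."""
        if bucket_id in self.local:
            self.hits += 1
            self.local.move_to_end(bucket_id)  # mark as most recently used
            return self.local[bucket_id]
        self.misses += 1
        data = self.remote[bucket_id]          # slow path: object storage
        self.local[bucket_id] = data
        if len(self.local) > self.capacity:
            self.local.popitem(last=False)     # evict least recently used
        return data


if __name__ == "__main__":
    cache = BucketCache(capacity=2)
    for b in ("b1", "b2", "b3"):
        cache.upload(b, b.encode())
    for b in ("b1", "b2", "b1", "b3", "b2"):
        cache.read(b)
    print(cache.hits, cache.misses, list(cache.local))  # 1 4 ['b3', 'b2']
```

The hit rate of such a cache is what determines whether searches over recent data stay fast; sizing the local cache to the commonly searched time range is the main tuning lever in this model.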

Amazon OpenSearch Service

Amazon OpenSearch Service is a managed service for OpenSearch, the open-source fork of Elasticsearch 7.10, and it also supports legacy Elasticsearch versions up to 7.10. It removes much of the operational overhead associated with cluster management and scaling. Tight integration with AWS services such as Amazon Data Firehose and CloudWatch Logs simplifies ingestion from many sources while leveraging cost-effective storage options.

For automatic capacity management, Amazon OpenSearch Serverless scales with workload demand, while provisioned domains are resized as needs change. UltraWarm and cold storage tiers, both backed by Amazon S3, provide significant cost reductions for long-term data retention.

Google Cloud Logging and BigQuery

Google Cloud Logging ingests logs in real time and can route them to BigQuery through log sinks, or make them queryable with SQL through Log Analytics. This lets organizations run complex analytical queries across massive log datasets on Google’s managed, global infrastructure.

Automatic scaling and a pay-per-use pricing model make the platform particularly attractive for organizations with variable log volumes. Log sinks can also route entries to Pub/Sub, where Google Cloud data pipeline tools such as Dataflow handle log processing and enrichment.

Emerging Technologies and Innovation Trends

Object Storage Integration

Modern log storage platforms increasingly leverage object storage systems such as Amazon S3, Google Cloud Storage, and Azure Blob Storage for cost-effective long-term retention. This architectural approach separates hot data requiring immediate access from warm and cold data stored for compliance and historical analysis purposes.
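One common way to organize logs in object storage is Hive-style partitioning by date and hour, so that queries and lifecycle rules can target a prefix instead of scanning everything. The key layout, prefix, and `.json.gz` naming below are assumptions for illustration, and the helper names are hypothetical.

```python
from datetime import datetime, timezone


def log_object_key(prefix: str, service: str, ts: datetime, part: int) -> str:
    """Build a Hive-style partitioned object key for one compressed batch of logs.

    Assumed layout: <prefix>/service=<name>/dt=YYYY-MM-DD/hour=HH/part-NNNNN.json.gz
    """
    if ts.tzinfo is None:
        raise ValueError("timestamp must be timezone-aware")
    ts = ts.astimezone(timezone.utc)  # partition on UTC to avoid DST ambiguity
    return (f"{prefix}/service={service}/dt={ts:%Y-%m-%d}/hour={ts:%H}/"
            f"part-{part:05d}.json.gz")


def day_prefix(prefix: str, service: str, day: str) -> str:
    """Prefix covering one service-day, usable for listing or lifecycle rules."""
    return f"{prefix}/service={service}/dt={day}/"


if __name__ == "__main__":
    ts = datetime(2024, 5, 1, 13, 7, tzinfo=timezone.utc)
    print(log_object_key("logs", "checkout", ts, 3))
    # logs/service=checkout/dt=2024-05-01/hour=13/part-00003.json.gz
```

Because every object for a given day shares one prefix, a query engine can skip irrelevant days entirely, and a storage lifecycle rule can move or expire whole prefixes as they age.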

Compression and Deduplication Advances

Log data is highly repetitive. Compression schemes designed specifically for it, often combined with deduplication that stores each repeated message template only once, can achieve compression ratios exceeding 10:1 while still supporting efficient queries. Smaller data reduces storage costs and speeds up transfers across distributed environments.
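A quick way to see why logs compress well is to compress synthetic, repetitive log lines with Python's standard `zlib` module and to count exact duplicate lines. Note that `zlib` is general-purpose DEFLATE rather than a log-specific algorithm, and ratios measured on synthetic data are not representative of production logs.

```python
import zlib


def compression_ratio(data: bytes, level: int = 9) -> float:
    """Ratio of original size to DEFLATE-compressed size (higher is better)."""
    return len(data) / len(zlib.compress(data, level))


def dedupe_lines(lines):
    """Collapse exact duplicate lines into (line, count) pairs, in first-seen order."""
    counts = {}
    for line in lines:
        counts[line] = counts.get(line, 0) + 1
    return list(counts.items())


def synthetic_logs(n: int):
    """Generate repetitive, log-like lines (synthetic, for illustration only)."""
    return [
        f"2024-05-01T12:00:{i % 60:02d}Z INFO checkout request completed "
        f"status=200 latency_ms={i % 50}"
        for i in range(n)
    ]


if __name__ == "__main__":
    lines = synthetic_logs(10_000)
    raw = "\n".join(lines).encode()
    print(f"zlib ratio: {compression_ratio(raw):.1f}:1")
    print(f"distinct lines: {len(dedupe_lines(lines))} of {len(lines)}")  # 300 of 10000
```

Real log lines differ in timestamps and IDs, so exact-line deduplication helps less in practice; log-specific schemes instead separate the constant template from the variable fields.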

Machine Learning-Powered Optimization

Machine learning is increasingly applied to log storage optimization through predictive analytics, automated anomaly detection, and intelligent data lifecycle management. These capabilities help platforms optimize storage allocation and identify cost-saving opportunities with minimal manual intervention.
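Anomaly detection on ingestion volume can be illustrated without a complex model. The sketch below swaps in a plain rolling z-score, a simple statistical technique rather than machine learning, to flag hours whose volume departs sharply from the preceding window. The window size, threshold, and function name are assumptions.

```python
from statistics import mean, stdev


def volume_anomalies(hourly_gb, window=24, threshold=3.0):
    """Return indices of hours whose volume is more than `threshold` standard
    deviations away from the mean of the preceding `window` hours."""
    anomalies = []
    for i in range(window, len(hourly_gb)):
        history = hourly_gb[i - window:i]
        mu, sigma = mean(history), stdev(history)
        # Skip flat history (sigma == 0) to avoid dividing by zero.
        if sigma > 0 and abs(hourly_gb[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


if __name__ == "__main__":
    # Two days of a steady hourly pattern, then a sudden spike.
    hourly = [10.0 + (i % 3) for i in range(48)] + [100.0]
    print(volume_anomalies(hourly))  # [48]
```

A sudden jump like this often signals a misconfigured debug level or a logging loop, which is exactly the kind of cost event worth catching early.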

Implementation Best Practices and Strategic Considerations

Data Retention Policy Development

Establishing comprehensive data retention policies forms the foundation of effective log storage optimization. Organizations must balance compliance requirements, operational needs, and cost considerations when defining retention periods for different log types and data sources.

Implementing automated lifecycle policies ensures consistent application of retention rules while minimizing manual intervention. These policies should account for legal hold requirements, audit obligations, and business continuity needs.
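A retention policy can be expressed as a table of per-type retention periods plus an override for legal holds. In this sketch the log types, periods, and function name are hypothetical, and unknown log types raise an error so that nothing is deleted without an explicit rule.

```python
from datetime import date

# Hypothetical retention periods in days; real values come from legal/compliance review.
RETENTION_DAYS = {
    "debug": 7,
    "application": 30,
    "security_audit": 365,
}


def is_expired(log_type: str, created: date, today: date, legal_hold: bool = False) -> bool:
    """Return True if logs of `log_type` created on `created` may be deleted `today`."""
    if legal_hold:
        return False  # a legal hold overrides every retention rule
    days = RETENTION_DAYS.get(log_type)
    if days is None:
        # Fail closed: never delete data that has no explicit retention rule.
        raise ValueError(f"no retention rule for log type {log_type!r}")
    return (today - created).days > days


if __name__ == "__main__":
    print(is_expired("debug", date(2024, 1, 1), date(2024, 1, 10)))           # True
    print(is_expired("security_audit", date(2024, 1, 1), date(2024, 1, 10)))  # False
```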

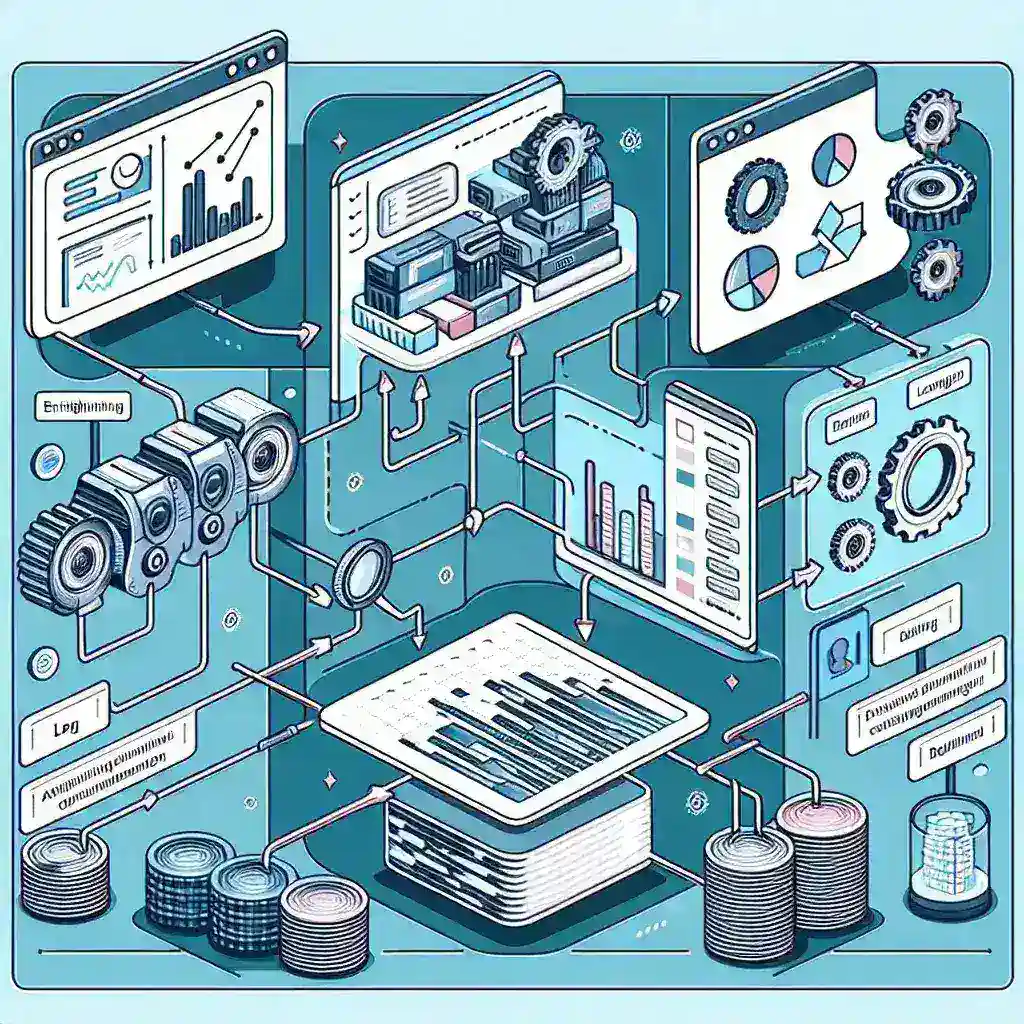

Multi-Tier Storage Architecture

Designing an effective multi-tier storage architecture involves categorizing log data based on access patterns, retention requirements, and business value. Hot tier storage provides immediate access for active troubleshooting and real-time monitoring, while warm and cold tiers offer cost-effective solutions for historical data and compliance archives.
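The categorization step might look like the following, where an index's age and recent query count decide its tier. The thresholds (7 and 90 days, 100 and 10 queries per 30 days) and the function name are illustrative assumptions, not recommendations from any platform.

```python
def choose_tier(age_days: int, queries_last_30d: int) -> str:
    """Assign a storage tier from data age and recent access (illustrative thresholds)."""
    # Recent or heavily queried data stays hot for troubleshooting and monitoring.
    if age_days <= 7 or queries_last_30d >= 100:
        return "hot"
    # Moderately old or occasionally queried data goes to the warm tier.
    if age_days <= 90 or queries_last_30d >= 10:
        return "warm"
    # Everything else is kept only for compliance and historical analysis.
    return "cold"


if __name__ == "__main__":
    for age, queries in [(3, 0), (30, 0), (365, 20), (365, 0)]:
        print(age, queries, choose_tier(age, queries))
```

Using access counts alongside age keeps old but still-popular data (for example, an incident under investigation) from being demoted too early.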

Performance Monitoring and Optimization

Continuous monitoring of storage performance metrics enables proactive identification of optimization opportunities. Key performance indicators include ingestion rates, query response times, storage utilization efficiency, and cost per gigabyte stored.
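These KPIs can be computed from a few raw measurements. This sketch assumes you already collect bytes ingested over a time window, query latencies, raw and stored volume, and monthly cost; it uses the raw-to-stored ratio as one possible proxy for storage utilization efficiency, and the function name is hypothetical.

```python
from statistics import quantiles


def storage_kpis(bytes_ingested: int, window_seconds: float,
                 query_latencies_ms: list, raw_gb: float,
                 stored_gb: float, monthly_cost: float) -> dict:
    """Compute a few log-storage KPIs from raw measurements (hypothetical helper)."""
    # quantiles(..., n=20) returns 19 cut points; the last is the 95th percentile.
    p95_ms = quantiles(query_latencies_ms, n=20)[-1]
    return {
        "ingest_mb_per_s": bytes_ingested / window_seconds / 1e6,
        "p95_query_ms": p95_ms,
        # Raw-to-stored ratio as one proxy for storage efficiency (an assumption).
        "storage_efficiency": raw_gb / stored_gb,
        "cost_per_gb_month": monthly_cost / stored_gb,
    }


if __name__ == "__main__":
    kpis = storage_kpis(
        bytes_ingested=3_600_000_000,  # bytes ingested in one hour
        window_seconds=3600,
        query_latencies_ms=list(range(1, 101)),
        raw_gb=1000.0,
        stored_gb=125.0,
        monthly_cost=250.0,
    )
    print(kpis)
```

Tracking these values over time, rather than as one-off snapshots, is what makes regressions in cost or latency visible.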

Regular performance reviews should evaluate whether current storage configurations align with evolving business requirements and identify opportunities for cost optimization or performance enhancement.

Security and Compliance Considerations

High-volume log storage platforms must implement robust security measures to protect sensitive information while maintaining compliance with industry regulations. Encryption at rest and in transit ensures data protection throughout the storage lifecycle, while role-based access controls limit data exposure to authorized personnel.
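Role-based access control for logs is often expressed as roles mapped to index or stream name patterns. The sketch below shows a default-deny check using the standard `fnmatch` module; the role names and patterns are hypothetical, and real platforms enforce this in their own security layers rather than in application code.

```python
from fnmatch import fnmatchcase

# Hypothetical role definitions: role -> index name patterns it may read.
ROLE_INDEX_PATTERNS = {
    "sre": ["logs-app-*", "logs-infra-*"],
    "security_analyst": ["logs-*"],
    "developer": ["logs-app-*"],
}


def can_read(roles, index: str) -> bool:
    """Default deny: allow only if some role has a pattern matching the index."""
    return any(
        fnmatchcase(index, pattern)
        for role in roles
        for pattern in ROLE_INDEX_PATTERNS.get(role, [])
    )


if __name__ == "__main__":
    print(can_read(["developer"], "logs-app-checkout-2024.05.01"))  # True
    print(can_read(["developer"], "logs-audit-2024.05.01"))         # False
```

Unknown roles contribute no patterns, so a misconfigured user sees nothing rather than everything.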

Compliance frameworks such as GDPR, HIPAA, and SOX impose specific requirements for log data handling, retention, and deletion. Leading platforms provide built-in compliance features and audit trails to support regulatory requirements while maintaining operational efficiency.

Future Outlook and Technology Evolution

The log storage optimization landscape continues to evolve rapidly, driven by growing data volumes, advancing technology capabilities, and changing business requirements. Edge computing deployments create new challenges for collecting logs across distributed sites and storing them centrally, calling for new approaches to data aggregation and processing.

Serverless computing models are influencing log storage architecture design, emphasizing event-driven processing and consumption-based pricing models. These trends suggest a future where log storage platforms become increasingly intelligent, automated, and cost-effective.

Making the Right Platform Choice

Selecting the optimal platform for high-volume log storage optimization requires careful evaluation of current requirements, future growth projections, and total cost of ownership considerations. Organizations should conduct thorough proof-of-concept evaluations using representative data volumes and query patterns to validate platform capabilities.

The decision should account for factors beyond pure technical capabilities, including vendor support quality, ecosystem integration opportunities, and long-term technology roadmaps. Enterprise search capabilities often play a crucial role in maximizing the value derived from stored log data.

Success in log storage optimization ultimately depends on aligning platform capabilities with specific organizational needs while keeping the flexibility to adapt as requirements evolve. By carefully evaluating the available options and implementing best practices, organizations can achieve significant cost savings while improving operational efficiency and data accessibility.